October 09, 2025

Key takeaways:

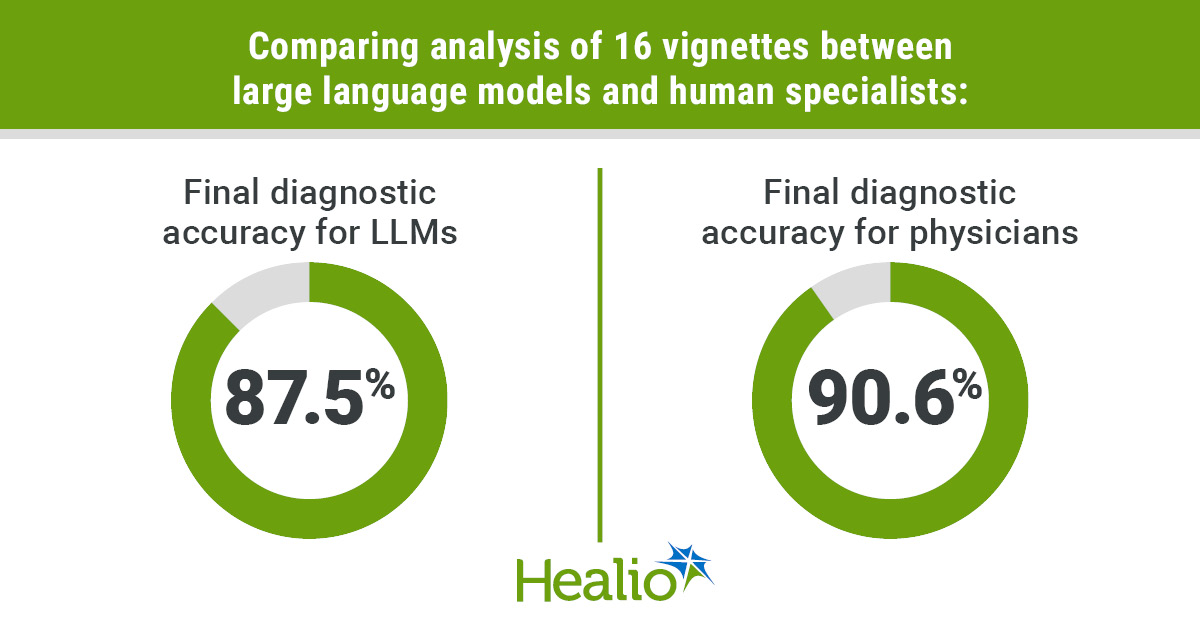

- Large language models performed better in differential diagnoses, but physicians had better final diagnosis accuracy.

- The researchers said the differences in endpoints were not statistically significant.

BALTIMORE — Three large language models were nonsuperior to three board-certified sleep physicians in diagnostic accuracy when analyzing a small cohort of clinical vignettes, according to a presenter.

“AI is a system which performs a task (that) normally requires human intelligence,” Anshum Patel, MBBS, clinical research fellow in the department of sleep medicine at the Mayo Clinic in Florida, said at the American Neurological Association annual meeting.

Data were derived from Patel A, et al. Artificial intelligence in sleep medicine: Exploring the diagnostic accuracy of LLMs. Presented at: American Neurological Association annual meeting; Sept. 13-16, 2025; Baltimore.

Machine learning is a subset of AI that enables systems to identify patterns directly from data, Patel said, just as medical residents learn diagnostic patterns from thousands of encounters with patients.

Deep learning is a subset of machine learning that uses complex neural networks to analyze intricate data such as medical images, he continued, like neuroradiologists identifying patterns in an MRI that generalists may miss.

Large language models (LLMs) are a subset of deep learning, Patel added. Trained on textual data, they can process and produce written language.

“So that being said, can LLMs reason through the case units like the physicians?” Patel said.

Patel and colleagues sought to investigate which group would achieve higher differential diagnosis accuracy: three board-certified sleep physicians with 8 to 17 years of experience, or three LLMs (ChatGPT-4, Gemini 2.0 and DeepSeek).

The researchers asked the physicians and LLMs to analyze 16 diverse clinical vignettes from the 2019 Case Book of Sleep Medicine, published by the American Academy of Sleep Medicine (AASM), as accurately as possible.

The primary endpoint was the mean percentage of differential diagnoses that matched the AASM list across all 16 cases. The secondary endpoint was final diagnosis accuracy, scored for each case on a Likert scale from 0 to 2, with 0 indicating “no match” and 2 indicating “full match.” The total score was then converted to an accuracy percentage.
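The conversion from Likert scores to an accuracy percentage can be illustrated with a minimal sketch. It assumes the percentage is the total score divided by the maximum possible score (2 points for each of the 16 cases), which the presentation summary does not spell out. The function name, the treatment of a score of 1 as a partial match and the example ratings are hypothetical and are not study data.

```python
def final_diagnosis_accuracy(scores, max_per_case=2):
    """Convert per-case match scores (0 to max_per_case) into a percentage.

    Assumption: accuracy = total score / maximum possible score * 100.
    """
    if not scores:
        raise ValueError("at least one case score is required")
    if any(s < 0 or s > max_per_case for s in scores):
        raise ValueError("scores must lie between 0 and max_per_case")
    return 100.0 * sum(scores) / (max_per_case * len(scores))


# Hypothetical ratings for 16 vignettes (not taken from the study):
# 13 full matches (2), 2 assumed partial matches (1) and 1 miss (0).
example_scores = [2] * 13 + [1] * 2 + [0]
print(round(final_diagnosis_accuracy(example_scores), 1))  # prints 87.5
```

Under this assumption, a total of 28 out of a possible 32 points corresponds to 87.5%.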

Mean accuracy in differential diagnosis among LLMs was highest for Gemini 2.0 (77.7%) followed by ChatGPT-4 (76.9%) and DeepSeek (70.7%). For the three human experts, total matches were 48 of 70 (70.8%), 52 of 70 (74.3%) and 50 of 70 (73.5%) with an average accuracy for all three of 72.9%.

Regarding final diagnosis of the AASM sleep-related vignettes, all three LLMs recorded an identical accuracy of 87.5%, while the three physicians recorded 90.6% overall (81.2% for physician A, 93.7% for B and 96.9% for C).

The researchers noted that the differences between physicians and LLMs were not statistically significant for either endpoint, while acknowledging the small sample size and the reliance on a static snapshot of data that are constantly evolving.

“LLMs are performing equally to the physicians, and they can serve as a powerful tool in clinical decision support,” Patel said.

Patel suggested that future research into LLMs for diagnostic purposes may begin with fine-tuning the models on real-time diagnoses, or extend into building datasets from which physicians can retrieve required information through LLMs as needed.

“Our ongoing work can show that these LLMs have been improving very rapidly, and we are approaching the performance plateau,” Patel said. “LLMs have been improving, and they will augment the clinical reasoning, but they will not replace (physicians).”

For more information:

Anshum Patel, MBBS, can be reached at patel.anusham@mayo.edu.